Introduction

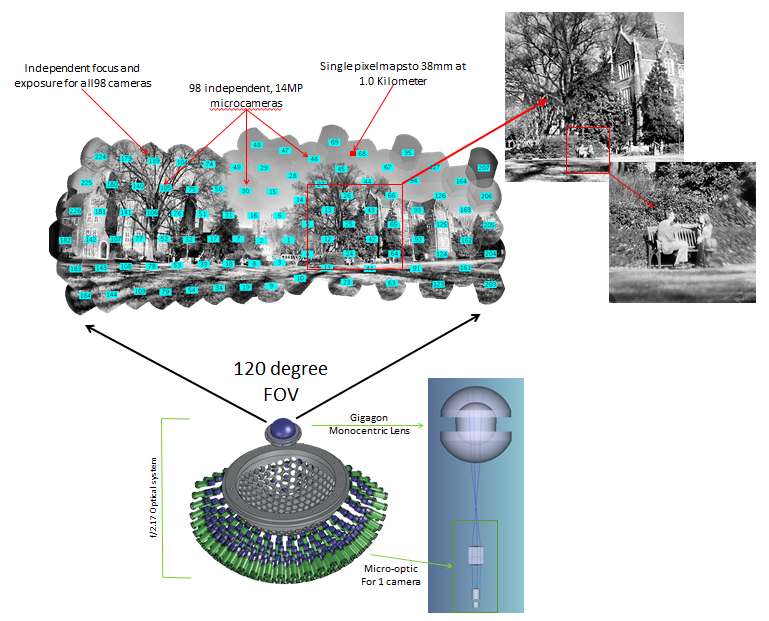

This program focuses on building wide-field, video-rate, gigapixel cameras in small, low-cost form factors. Traditional monolithic lens designs must increase f/# and lens complexity and reduce field of view as image scale increases. In addition, traditional electronic architectures are not designed for highly parallel streaming and analysis of large-scale images. The AWARE Wide Field of View project addresses these challenges using multiscale designs that combine a monocentric objective lens with arrays of secondary microcameras.

The optical design explored here combines a multiscale architecture with a monocentric objective lens to achieve near-diffraction-limited performance throughout the field. A monocentric objective enables the use of identical secondary systems (referred to as microcameras), greatly simplifying design and manufacturing. Following the multiscale lens design methodology, the field of view (FOV) is increased by arraying microcameras along the focal surface of the objective. In practice, the FOV is limited by the physical housing. As a result, cost and volume scale much more nearly linearly with FOV than they do in a monolithic design. Additionally, each microcamera operates independently, offering much more flexibility in image capture, exposure, and focus parameters. A basic architecture is shown below, producing a 1.0 gigapixel image based on 98 micro-optics covering a 120 by 40 degree FOV.

Design Methodology

Optics

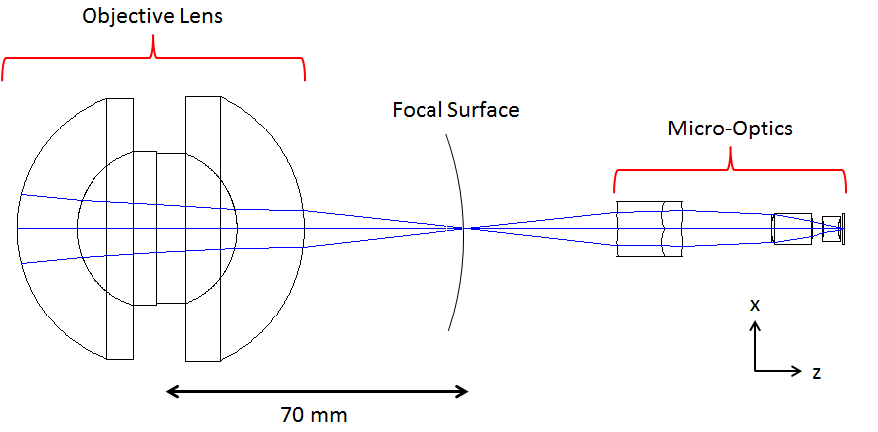

Multiscale lens design attempts to sever the inherent connection between geometric aberrations and aperture size that plagues traditional lenses. By taking advantage of the superior imaging capabilities of small-scale optics, a multiscale lens can increase its field of view and image size simply by arraying additional optical elements, similar to a lens array. The resulting partial images can then be stitched during post-processing to create a single image of a large field. An array of identical microcameras is used to synthesize a curved focal plane, with micro-optics that locally flatten the field onto a standard detector.

It is increasingly difficult to achieve diffraction-limited performance in an optical instrument as the entrance aperture increases in size. Scaling the size of an optical system to gigapixels also scales the optical path difference errors and the resulting aberrations. Because of this, larger instruments require more surfaces and elements to produce diffraction-limited performance. Multiscale designs are a means of combating this escalating complexity. Rather than forming an image with a single monolithic lens system, multiscale designs divide the imaging task between an objective lens and a multitude of smaller micro-optics. The objective lens is a precise but simple lens that produces an imperfect image with known aberrations. Each microcamera relays a portion of the objective image onto its respective sensor, correcting for the objective aberrations to form a diffraction-limited image. Because there are typically hundreds or thousands of microcameras per objective, the microcamera optics are much smaller and therefore easier and cheaper to fabricate. The scale of the microcameras is typically that of plastic molded lenses, enabling mass production of complex aspherical shapes and therefore minimizing the number of elements. An example optical layout is modeled in the figure below.

Electronics

The electronics subsystem reflects the multiscale optical design and has been developed to scale to an arbitrary number of microcameras. The focus and exposure parameters of each camera are independently controlled, and the communications architecture is optimized to minimize the amount of transmitted data. The electronics architecture is designed to support multiple simultaneous users and can scale the output bandwidth depending on application requirements.

In the current implementation, each microcamera includes a 14 megapixel focal plane, a focus mechanism, and a HiSPi interface for data transmission. An FPGA-based camera control module provides local processing and data management. The control modules communicate over Ethernet with an external rendering computer. Each module connects to two microcameras and is used to synchronize image collection, scale images based on system requirements, and implement basic exposure and focus capabilities for the microcameras.
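To give a feel for the scale these electronics must handle, the following sketch works out the number of control modules and the raw pixel rate for the prototype. It uses only figures quoted in this document (226 microcameras, 14 megapixels per focal plane, 10 fps at full resolution, two microcameras per module). The function names are hypothetical, and bit depth and compression are not stated in the source, so the result is in pixels per second rather than bytes.

```python
import math

# Figures quoted in this document for the AWARE-2 prototype.
MICROCAMERAS = 226           # microcameras in the array
PIXELS_PER_SENSOR = 14e6     # 14 megapixel focal plane per microcamera
FRAME_RATE_FPS = 10          # full-resolution frame rate per microcamera
CAMERAS_PER_MODULE = 2       # each control module connects to two microcameras


def control_modules(n_cameras, per_module=CAMERAS_PER_MODULE):
    """Number of control modules needed to serve n_cameras (hypothetical helper)."""
    return math.ceil(n_cameras / per_module)


def raw_pixel_rate(n_cameras, pixels=PIXELS_PER_SENSOR, fps=FRAME_RATE_FPS):
    """Unbinned, uncompressed pixel rate in pixels per second."""
    return n_cameras * pixels * fps


if __name__ == "__main__":
    print("control modules:", control_modules(MICROCAMERAS))
    print("raw rate (Gpixel/s):", raw_pixel_rate(MICROCAMERAS) / 1e9)
```

Under these numbers the array needs 113 control modules and produces roughly 31.6 gigapixels per second before any scaling, which illustrates why the modules scale images locally instead of forwarding every full-resolution frame.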

Image Formation

The image formation process generates a seamless image from the microcameras in the array. Since each camera operates independently, this process must account for alignment, rotation, and illumination discrepancies between the microcameras. To approach real-time compositing, a forward model based on the multiscale optical design is used to map individual image pixels into a global coordinate space. This allows display-scale images to be stitched at multiple frames per second, independent of model corrections, which can happen at a significantly slower rate.
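The idea of a forward model that maps sensor pixels into a global coordinate space can be illustrated with a deliberately simplified sketch. This is not the project's actual model: it assumes a small-angle approximation, ignores lens distortion, and uses a hypothetical `CameraModel` with a pointing direction, a roll angle, and a gain term for illumination correction. The 38 microradian per-pixel FOV is the prototype figure quoted in this document. The 4000 by 3500 sensor size is a placeholder chosen only to give 14 megapixels.

```python
import math
from dataclasses import dataclass

IFOV_RAD = 38e-6  # single-pixel FOV quoted for the prototype


@dataclass
class CameraModel:
    """Hypothetical per-microcamera parameters (assumed, not from the source)."""
    az0: float          # azimuth of the microcamera's optical axis, radians
    el0: float          # elevation of the optical axis, radians
    roll: float         # rotation of the sensor about the optical axis, radians
    width: int = 4000   # placeholder sensor width in pixels
    height: int = 3500  # placeholder sensor height in pixels
    gain: float = 1.0   # multiplicative illumination correction


def pixel_to_global(cam, u, v):
    """Map sensor pixel (u, v) to global (azimuth, elevation) in radians."""
    # Offset of the pixel from the sensor center, in pixels.
    du = u - (cam.width - 1) / 2.0
    dv = v - (cam.height - 1) / 2.0
    # Undo the sensor's roll about the optical axis.
    c, s = math.cos(cam.roll), math.sin(cam.roll)
    dx = c * du - s * dv
    dy = s * du + c * dv
    # Small-angle approximation: one pixel subtends IFOV_RAD.
    return cam.az0 + dx * IFOV_RAD, cam.el0 + dy * IFOV_RAD


def corrected_value(cam, raw_value):
    """Apply the per-camera illumination correction to a pixel value."""
    return cam.gain * raw_value
```

Because the mapping depends only on a few per-camera parameters, the parameters can be refined slowly in the background while the compositing step keeps applying the most recent values every frame, which matches the separation between stitching rate and model-correction rate described above.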

The current image formation process supports two functional modes of operation. In the "Live-View" mode, the camera generates a single display-scale image stream by binning information at the sensor level to minimize the transmission bandwidth and then performing GPU-based compositing on a display computer. This mode allows users to interactively explore events in the scene in real time. The "Snapshot" mode captures a full dataset in 14 seconds and stores the information for future rendering and analysis.
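The bandwidth saving from binning can be sketched as follows. The source does not say how the sensors combine pixels, so this example assumes simple averaging over non-overlapping k by k blocks. `bin_image` and `bandwidth_reduction` are hypothetical names, and plain Python lists stand in for image data.

```python
def bin_image(image, k):
    """Average non-overlapping k x k blocks of a 2D list of pixel values.

    Assumes averaging; real sensor binning modes may sum or skip pixels.
    """
    rows, cols = len(image), len(image[0])
    if rows % k or cols % k:
        raise ValueError("image dimensions must be multiples of k")
    binned = []
    for r in range(0, rows, k):
        out_row = []
        for c in range(0, cols, k):
            block_sum = sum(image[r + i][c + j]
                            for i in range(k) for j in range(k))
            out_row.append(block_sum / (k * k))
        binned.append(out_row)
    return binned


def bandwidth_reduction(k):
    """Factor by which k x k binning reduces the number of transmitted pixels."""
    return k * k


if __name__ == "__main__":
    tile = [[1, 2, 3, 4],
            [5, 6, 7, 8],
            [9, 10, 11, 12],
            [13, 14, 15, 16]]
    print(bin_image(tile, 2))        # [[3.5, 5.5], [11.5, 13.5]]
    print(bandwidth_reduction(2))    # 4
```

The quadratic reduction is what makes a display-scale stream practical: binning by a factor of k in each dimension cuts the transmitted pixel count by k squared.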

Flexibility

A major advantage of this design is that it can be scaled. Except for slightly different surface curvatures, the same microcamera design suffices for 2, 10, and 40 gigapixel systems. Increasing the FOV is also strictly a matter of adding more cameras, with no change in the objective lens or micro-optic design.

Current Prototype System

A two-gigapixel prototype camera has been built and is shown below. This system provides a 120 degree circular FOV with 226 microcameras, a 38 microradian FOV for a single pixel, and an effective f-number of 2.17. Each microcamera operates at 10 fps at full resolution. The optical volume is about 8 liters and the total enclosure is about 300 liters. The optical track length from the first surface of the objective to the focal plane is 188 mm.
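The two-gigapixel figure can be cross-checked from the quoted FOV and per-pixel angle. The sketch below treats the 120 degree circular FOV as a cone and divides its solid angle by the solid angle of one pixel, approximated as the square of 38 microradians. It also computes the raw pixel count from 226 sensors of 14 megapixels each. The function names are hypothetical, and the interpretation of the difference is an assumption, not a statement from the source.

```python
import math


def cone_solid_angle(full_angle_deg):
    """Solid angle in steradians of a cone with the given full apex angle."""
    half = math.radians(full_angle_deg / 2.0)
    return 2.0 * math.pi * (1.0 - math.cos(half))


def pixel_count(full_angle_deg, ifov_rad):
    """Approximate number of pixels needed to tile the cone at the given IFOV."""
    return cone_solid_angle(full_angle_deg) / (ifov_rad ** 2)


if __name__ == "__main__":
    composite = pixel_count(120.0, 38e-6)   # pixels implied by FOV and IFOV
    raw = 226 * 14e6                        # pixels summed over all sensors
    print("composite (Gpixel):", composite / 1e9)
    print("raw sensor total (Gpixel):", raw / 1e9)
    print("raw / composite:", raw / composite)
```

The estimate comes out at about 2.2 gigapixels, consistent with the two-gigapixel description, while the sensors together provide about 3.2 gigapixels. One plausible reading, stated here as an assumption, is that the excess is consumed by overlap between neighboring microcamera fields and by sensor area outside each microcamera's useful image.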

Construction

AWARE-2 was constructed by an academic/industrial consortium with significant contributions from more than 50 graduate students, researchers, and engineers. Duke University is the lead institution and led the design and manufacturing team. The lens design team is led by Dan Marks at Duke and includes lens designers from the University of California San Diego (Joe Ford and Eric Tremblay) and from Rochester Photonics and Moondog Optics. The electronic architecture team is led by Ron Stack at Distant Focus Corporation. The University of Arizona team, led by Mike Gehm, is responsible for image formation, the image data pipeline, and stitching. Raytheon Corporation is responsible for applications development. Aptina is our focal plane supplier and is supplying embedded processing components. Altera supplies field-programmable gate arrays. The construction process is illustrated in the time-lapse video below.

Future Systems

The AWARE-10 camera, a 5 to 10 gigapixel system, is in production and will be online later in 2012. Significant improvements have been made to the optics, electronics, and integration of the camera. Some of these are described under Camera Evolution. The goal of this DARPA project is to design a long-term production camera that is highly scalable from sub-gigapixel to tens of gigapixels. Deployment of the system is envisioned for military, commercial, and civilian applications.

People and Collaborators

This project is a collaboration among Duke University, University of Arizona, University of California San Diego, Aptina, Raytheon, RPC Photonics, and Distant Focus Corporation. The AWARE project is led at DARPA by Dr. Nibir Dhar. The seeds of AWARE were planted at DARPA through the integrated sensing and processing model developed by Dr. Dennis Healy.